Mark Cuban, the billionaire entrepreneur, star of ABC reality show “Shark Tank” and owner of the Dallas Mavericks basketball team, is certain that a version of the Terminator is coming.

Speaking to an audience of conservative high school students at Turning Point USA’s High School Leadership Summit in Washington, D.C., on Tuesday, Cuban issued several serious warnings about the future of artificial intelligence, among them a reference to the 1984 movie "The Terminator," starring Arnold Schwarzenegger as a cyborg assassin.

“Let me scare the s--- out of you, all right. If you don’t think by the time most of you are in your mid-40s that a Terminator will appear, you’re crazy,” Cuban says, as seen on a Facebook Live video.

Cuban was in conversation with Charlie Kirk, the 24-year-old founder of Turning Point USA, a right-wing non-profit organization aimed at promoting conservative political ideas among high school students.

Cuban does not identify as Republican or Democrat: “I don’t belong to any political party and I never will,” Cuban told Kirk.

Amid a wide-ranging debate about what the scope of government should be, Cuban argued that the government should be involved in funding artificial intelligence research. In November, the billionaire warned that the United States should not allow countries like China and Russia to pull ahead in developing artificial intelligence. China’s government has said publicly it plans to be the global leader in artificial intelligence by 2030, and Russian President Vladimir Putin has said, "the one who becomes the leader in this sphere will be the ruler of the world."

“And our defense organizations are starting to, but as a country, the administration is barely even acknowledging that it is an issue,” Cuban said to Kirk this week about artificial intelligence research in the U.S.

Cuban says artificial intelligence is too large and too important an issue for the government to stay out of.

“That is an investment that has to come from government," he says. "We can’t do enough independently with independent entrepreneurs because — trust me. I understand AI. And it is bigger than what any individual company can do because Google, Microsoft, etc. aren’t worried about defense applications. They are worried about making more money and they are using it to make more money."

The biggest impediment to a Terminator-like weapon being built currently, according to Cuban, is the lack of sufficient power supplies.

“Now, this may sound crazy for a lot of people, but the idea of autonomous weaponry in a mobile device run by a remote to power device is going to happen,” says Cuban. “I want it to be ours. And I want to be able to know that the government is in position to defend against autonomous weaponry. That has to be done by the government. That’s the same approach we have. Because it is such a big issue.”

Kirk disagreed with Cuban’s perspective that the government should be involved in developing artificial intelligence. Kirk is of the mind that virtually everything ought to be left to a free and open market — in other words, that private companies and researchers should be at the forefront of artificial intelligence development, not the government.

Cuban doubled down, arguing that the government should be involved in funding artificial intelligence because of the seriousness and scope of the threat of autonomous weapons.

“The future of warfare is on, built around, through, up and down artificial intelligence. If we don’t win that war, the next generation that are sitting here having this conversation for the next generation of high school kids? It is going to be, how are we going to catch up? That’s why we need to invest,” Cuban says. “I think we are truly at threat from autonomous weapons unless as a nation we either come to agreements with other nations on this — and we have the ability to monitor them, you know, trust but verify — but as big a threat as nuclear is, AI is even bigger.”

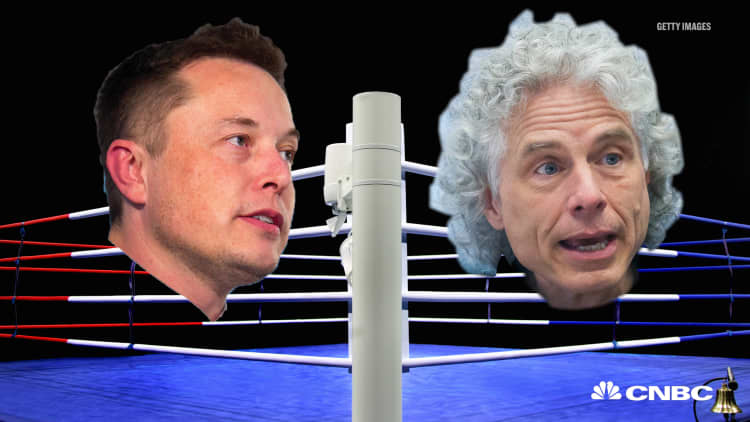

Cuban’s fears are similar in scope to those of another high-profile tech entrepreneur and billionaire: Elon Musk. The Tesla and SpaceX boss has said that artificial intelligence is both “far more dangerous than nukes” and poses “vastly more risk than North Korea.”

Earlier in July, Musk and all three co-founders of Google’s DeepMind were among the thousands of individuals and almost 200 organizations who publicly committed not to develop, manufacture or use killer robots. They did so by signing a pledge organized and published by the Boston nonprofit Future of Life Institute, an organization that researches the benefits and risks of artificial intelligence along with other existential issues related to advancing technology.

Even as companies pledge not to be involved with killer robots, Cuban says he is sure the technology for autonomous robots will become commonplace.

“We already have the ability to have weapons think," Cuban says. "There is already the ability for autonomous weapons and they are only going to go further, further, further as processors get more advanced. Once we solve the [portable] battery problem, so these Terminators can be out in the field for an extended period of time…. If we don’t win that battle, this world is upside down. That’s what scares the s--- out of me.”

The potential of artificial intelligence to become dangerous is why it is one of a select few issues the government should be involved in regulating, says Cuban. The others the billionaire tech entrepreneur mentioned include health care and climate change.

Artificial intelligence won’t just change the way war is waged. It will change the job market, too, Cuban says.

"Literally, who you work for, how you work, the type of work you do is going to be completely different than your parents within the next 10 to 15 years," Cuban says. "Even if you have no interest in computers, no interest in programming, it doesn’t matter. Just like you laugh at your parents who might or might not understand Snapchat and Instagram and Twitter and the like, you are going to have to understand AI or people are going to laugh at you."

Disclosure: CNBC owns the exclusive off-network cable rights to "Shark Tank."
