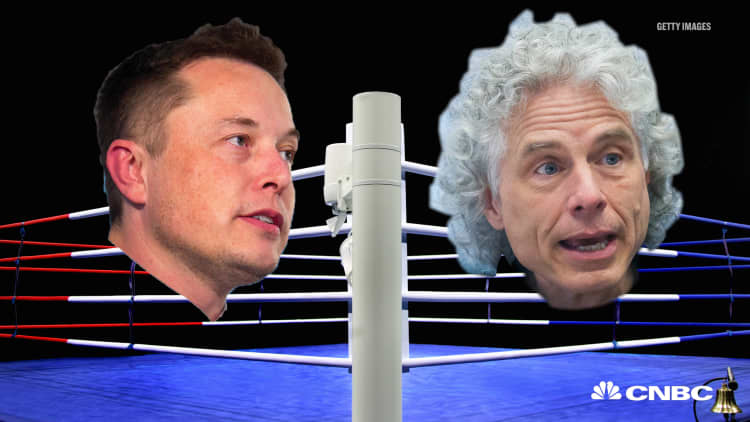

Psychologist Steven Pinker has a PhD from Harvard and is a professor there. He's also taught at Stanford and MIT. He's published 10 books and is a member of the National Academy of Sciences. He's been a finalist for the Pulitzer Prize twice. He holds nine honorary doctorates, was listed as one of the "100 Most Influential People in the World Today" by Time and is the chair of the American Heritage Dictionary usage panel.

In sum, Pinker is widely regarded as a brilliant, accomplished man.

And that's why billionaire tech titan Elon Musk says he is so disturbed by what he sees as Pinker's lack of understanding of artificial intelligence. If Pinker doesn't have a grasp of AI, "humanity is in deep trouble," Musk says on Twitter.

The tweet was in response to a story about Pinker's appearance on the podcast, "Geek's Guide to the Galaxy," published in "Wired."

In the episode, Pinker criticizes Musk for sounding alarm bells about the potential of AI, using Tesla's self-driving capabilities as an example of why AI is not as threatening as Musk warns.

"If Elon Musk was really serious about the AI threat, he'd stop building those self-driving cars, which are the first kind of advanced AI that we're going to see," says Pinker on the podcast. (Teslas currently have the hardware necessary for fully self-driving capability, but not the software.)

"Now I don't think he stays up at night worrying that someone is going to program into a Tesla, 'Take me to the airport the quickest way possible,' and the car is just going to make a beeline across sidewalks and parks, mowing people down and uprooting trees because that's the way the Tesla interprets the command 'take me by the quickest route possible,'" says Pinker.

"That's just idiotic. You wouldn't build a car that way, because that isn't an example of artificial intelligence," he continues. "Plus, he'd get sued and there'd be reputational harms. You'd test the living daylights out of it before you let it on the streets."

Indeed, Musk has issued dire warnings about the potential dangers of AI.

He has said robots will be able to do everything better than humans; competition for AI at the national level will cause World War 3; and AI is a greater risk than North Korea.

Pinker's criticism of Musk echoes the sentiment the Harvard professor articulated in an op-ed published Saturday in the Canadian paper The Globe and Mail. In the piece, Pinker argues that the notion that artificial intelligence will be humanity's undoing is one of the overhyped doomsday scenarios. Pinker says bemoaning unrealistic apocalyptic scenarios is dangerous to society.

Musk, however, says the difference between machine intelligence built for a specific use case, like driving a car, and more generalized machine intelligence is massive. And he is shocked Pinker would argue otherwise.

"Wow, if even Pinker doesn't understand the difference between functional/narrow AI (eg. car) and general AI, when the latter *literally* has a million times more compute power and an open-ended utility function, humanity is in deep trouble," Musk says.

Musk believes that general AI, unregulated, has great potential for doing harm.

"I have exposure to the most cutting edge AI, and I think people should be really concerned by it," says Musk, speaking to the National Governors Association in July.

"AI is a fundamental risk to the existence of human civilization in a way that car accidents, airplane crashes, faulty drugs or bad food were not — they were harmful to a set of individuals within society, of course, but they were not harmful to society as a whole."

In particular, says Musk, artificial intelligence could start a war.

"The thing that is the most dangerous — and it is the hardest to ... get your arms around because it is not a physical thing — is a deep intelligence in the network.

"You say, 'What harm can a deep intelligence in the network do?' Well, it can start a war by doing fake news and spoofing email accounts and doing fake press releases and by manipulating information," Musk says to the bipartisan gathering of U.S. governors.

Musk recommends the government be proactive about regulating the industry.